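To be honest, software RAID, mdadm or otherwise, is pretty much a toy. Hardly anyone runs software RAID in real production environments; people go straight for a hardware RAID card. Still, a toy has its uses, right? In my view, if you build a software RAID at all, RAID0 is enough: it gives the disks a performance boost. RAID1/5/10 don't make much sense, because if something really goes wrong there's no guarantee the data can be recovered, and performance is a problem too. But as the saying goes, something is better than nothing.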

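This article covers two tasks for a software RAID1. First, how to expand the array. Second, if one of its disks fails, how to recover the data and put a new disk into the array.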

Install mdadm:

yum -y install mdadm

Create a RAID partition on each of the two new disks:

fdisk /dev/vdb

fdisk /dev/vdc

The interactive session (repeat it for each disk):

Command (m for help): n

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p): p

Partition number (1-4, default 1): 1

First sector (2048-20971519, default 2048):

Using default value 2048

Last sector, +sectors or +size{K,M,G} (2048-20971519, default 20971519):

Using default value 20971519

Partition 1 of type Linux and of size 10 GiB is set

Command (m for help): t

Selected partition 1

Hex code (type L to list all codes): fd

Changed type of partition 'Linux' to 'Linux raid autodetect'

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.
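The fdisk session above can also be done non-interactively. The sketch below swaps in sfdisk (also part of util-linux) for fdisk. It assumes you want exactly what the session above produces: an MBR (dos) label with one partition spanning the whole disk, type fd. The function name make_raid_partition is hypothetical. It replaces the partition table of whatever it is pointed at, so double-check the device name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a dos label with one full-size partition of
# type fd (Linux raid autodetect), like the fdisk session above.
# WARNING: this replaces the existing partition table on the target.
make_raid_partition() {
  local disk="$1"
  if [ -z "$disk" ]; then
    echo "usage: make_raid_partition /dev/vdX" >&2
    return 2
  fi
  # sfdisk script: "label: dos" selects MBR; ",,fd" means default start,
  # default size (rest of the disk), partition type fd.
  printf 'label: dos\n,,fd\n' | sfdisk --quiet "$disk"
}
```

For the two disks here, that would be make_raid_partition /dev/vdb followed by make_raid_partition /dev/vdc.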

Check the disks:

[root@softraid ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sr0 11:0 1 918M 0 rom

vda 252:0 0 10G 0 disk

├─vda1 252:1 0 488M 0 part /boot

└─vda2 252:2 0 9.5G 0 part

├─centos_softraid-root 253:0 0 8.6G 0 lvm /

└─centos_softraid-swap 253:1 0 976M 0 lvm [SWAP]

vdb 252:16 0 10G 0 disk

└─vdb1 252:17 0 10G 0 part

vdc 252:32 0 10G 0 disk

└─vdc1 252:33 0 10G 0 part

Create the RAID1 array:

mdadm -C /dev/md0 -l raid1 -n 2 /dev/vd[b-c]1

Check the array status:

mdadm -D /dev/md0

The RAID1 array is now created:

/dev/md0:

Version : 1.2

Creation Time : Mon Jun 24 21:39:27 2019

Raid Level : raid1

Array Size : 10475520 (9.99 GiB 10.73 GB)

Used Dev Size : 10475520 (9.99 GiB 10.73 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Mon Jun 24 21:40:19 2019

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Consistency Policy : resync

Name : softraid:0 (local to host softraid)

UUID : 1fb8fc7b:7851fcc4:f4818bdb:0c121770

Events : 17

Number Major Minor RaidDevice State

0 252 17 0 active sync /dev/vdb1

1 252 33 1 active sync /dev/vdc1
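When scripting around mdadm, it can help to pull a single field out of the mdadm -D report. The following is a minimal sketch. It assumes the "Key : value" layout shown above, which may differ between mdadm versions. The function name md_field is made up for illustration.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read an `mdadm -D` report on stdin and print the
# value of one "Key : value" field, e.g. md_field "State".
# Exits non-zero if the field is not found.
md_field() {
  local key="$1"
  awk -v key="$key" '
    {
      # Split on the first " : " only, so values such as
      # "softraid:0 (local to host softraid)" stay intact.
      i = index($0, " : ")
      if (i == 0) next
      k = substr($0, 1, i - 1)
      v = substr($0, i + 3)
      gsub(/^[ \t]+|[ \t]+$/, "", k)   # trim the key
      gsub(/^[ \t]+|[ \t]+$/, "", v)   # trim the value
      if (k == key) { print v; found = 1; exit }
    }
    END { exit (found ? 0 : 1) }
  '
}
```

For example, mdadm -D /dev/md0 | md_field State prints the array state, which should be clean for the array above.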

Create the LVM physical volume, volume group and logical volume:

pvcreate /dev/md0

vgcreate imlala-vg /dev/md0

lvcreate -l 100%FREE -n imlala-lv imlala-vg

Look up the full path of the LV:

lvdisplay

The output looks like this:

--- Logical volume ---

LV Path /dev/imlala-vg/imlala-lv

LV Name imlala-lv

VG Name imlala-vg

LV UUID hDSh7z-pvgG-1CYY-H6BT-DEDj-ZIit-52EpdL

LV Write Access read/write

LV Creation host, time softraid, 2019-06-23 21:41:34 +0800

LV Status available

# open 0

LV Size <9.99 GiB

Current LE 2557

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 256

Block device 253:2

Create an XFS filesystem and mount it:

mkfs.xfs /dev/imlala-vg/imlala-lv

mkdir -p /imlala-data

mount /dev/imlala-vg/imlala-lv /imlala-data

Check the mount status:

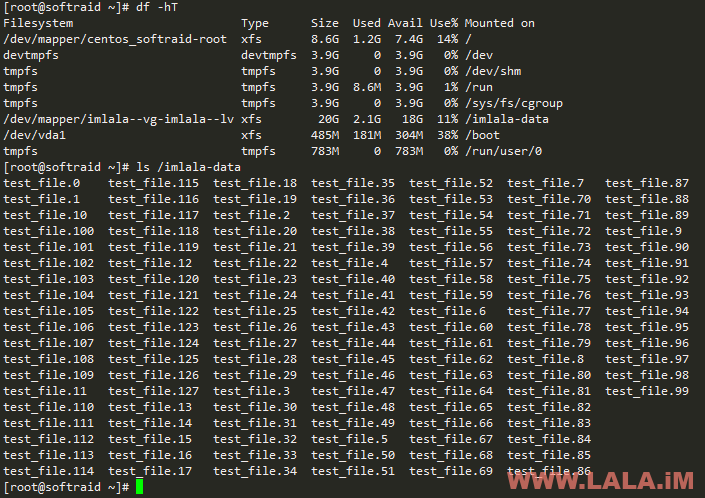

[root@softraid ~]# df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/mapper/centos_softraid-root xfs 8.6G 1.2G 7.5G 14% /

devtmpfs devtmpfs 3.9G 0 3.9G 0% /dev

tmpfs tmpfs 3.9G 0 3.9G 0% /dev/shm

tmpfs tmpfs 3.9G 17M 3.9G 1% /run

tmpfs tmpfs 3.9G 0 3.9G 0% /sys/fs/cgroup

/dev/vda1 xfs 485M 181M 304M 38% /boot

tmpfs tmpfs 783M 0 783M 0% /run/user/0

/dev/mapper/imlala--vg-imlala--lv xfs 10G 33M 10G 1% /imlala-data

If everything looks right, add it to fstab:

echo "/dev/imlala-vg/imlala-lv /imlala-data xfs defaults 0 0" >> /etc/fstab
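Running the echo ... >> /etc/fstab line twice leaves a duplicate entry. A small guard avoids that. This is a sketch: the function name add_fstab_entry is hypothetical. The optional second argument (the fstab path, defaulting to /etc/fstab) exists only so the function can be tried on a scratch file.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append an fstab line only if the exact same line is
# not already there. The second argument defaults to /etc/fstab.
add_fstab_entry() {
  local entry="$1"
  local fstab="${2:-/etc/fstab}"
  # -x: match the whole line, -F: fixed string (no regex surprises)
  if grep -qxF -- "$entry" "$fstab" 2>/dev/null; then
    echo "already present: $entry"
  else
    printf '%s\n' "$entry" >> "$fstab"
  fi
}
```

Usage: add_fstab_entry "/dev/imlala-vg/imlala-lv /imlala-data xfs defaults 0 0". Running mount -a afterwards is a common way to catch a typo before the next reboot does.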

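You may notice that these steps are not much different from how we built the RAID0 array earlier. But suppose we now add more disks to the server: how do we expand the existing RAID1 array? Expanding RAID1 is quite different from expanding RAID0.

My real advice is to simply build a new array and leave the existing one alone, because expanding a RAID1 array in mdadm is cumbersome. Still, in some special cases you have no choice but to expand the existing array. Is there a way?

There is. In mdadm, a RAID1 can be expanded by replacing its members. What does that mean? Right now our RAID1 is built from two 10G disks. A RAID1 array can only be as large as its smallest member. So if we add two disks larger than 10G and then retire the old ones, the array can grow.

Note that RAID1 is best built with an even number of disks, e.g. 2/4/6/8 bays. An odd number makes little sense; put another way, an odd-numbered RAID1 wastes the leftover disk. Here we add two 20G disks, replace the two existing 10G disks with them, and thereby expand the array.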

To verify that the expansion does not touch the existing data, first write some data into the mount directory. sysbench creates its test files in the current directory:

cd /imlala-data

sysbench --test=fileio --file-total-size=2G prepare

As you can see, 2G of data has been written:

[root@softraid imlala-data]# df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/mapper/centos_softraid-root xfs 8.6G 1.2G 7.4G 14% /

devtmpfs devtmpfs 3.9G 0 3.9G 0% /dev

tmpfs tmpfs 3.9G 0 3.9G 0% /dev/shm

tmpfs tmpfs 3.9G 8.6M 3.9G 1% /run

tmpfs tmpfs 3.9G 0 3.9G 0% /sys/fs/cgroup

/dev/mapper/imlala--vg-imlala--lv xfs 10G 2.1G 8.0G 21% /imlala-data

/dev/vda1 xfs 485M 181M 304M 38% /boot

tmpfs tmpfs 783M 0 783M 0% /run/user/0

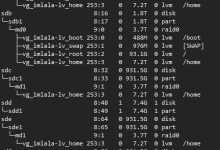
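df only shows that the space is in use. To confirm that the data itself survives the expansion, one option is to record checksums of the test files now and verify them at the end. This is a minimal sketch with hypothetical function names. It assumes GNU coreutils and findutils (sha256sum, sort -z, xargs -r), and that the manifest file is kept outside the directory being checked.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for a before/after integrity check.
# snapshot_checksums DIR MANIFEST : record sha256 of every file under DIR
# verify_checksums   DIR MANIFEST : re-check them, non-zero exit on mismatch
snapshot_checksums() {
  local dir="$1" manifest="$2"
  # Paths are stored relative to DIR; the redirect is opened before the cd,
  # so a relative MANIFEST path is resolved from the caller's directory.
  (cd "$dir" && find . -type f -print0 | sort -z | xargs -0 -r sha256sum) > "$manifest"
}

verify_checksums() {
  local dir="$1" manifest
  # Make the manifest path absolute before changing into DIR.
  manifest="$(cd "$(dirname "$2")" && pwd)/$(basename "$2")"
  (cd "$dir" && sha256sum --quiet -c "$manifest")
}
```

Usage: run snapshot_checksums /imlala-data /root/imlala.sha256 before expanding. Once the LV has been resized, verify_checksums /imlala-data /root/imlala.sha256 prints nothing and exits 0 if every file is unchanged.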

Check the disks again. Two brand-new disks are sitting there, waiting for us to get to work:

[root@softraid ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sr0 11:0 1 918M 0 rom

vda 252:0 0 10G 0 disk

├─vda1 252:1 0 488M 0 part /boot

└─vda2 252:2 0 9.5G 0 part

├─centos_softraid-root 253:0 0 8.6G 0 lvm /

└─centos_softraid-swap 253:1 0 976M 0 lvm [SWAP]

vdb 252:16 0 10G 0 disk

└─vdb1 252:17 0 10G 0 part

└─md0 9:0 0 10G 0 raid1

└─imlala--vg-imlala--lv 253:2 0 10G 0 lvm /imlala-data

vdc 252:32 0 10G 0 disk

└─vdc1 252:33 0 10G 0 part

└─md0 9:0 0 10G 0 raid1

└─imlala--vg-imlala--lv 253:2 0 10G 0 lvm /imlala-data

vdd 252:48 0 20G 0 disk

vde 252:64 0 20G 0 disk

As before, first create the RAID partitions:

fdisk /dev/vdd

fdisk /dev/vde

The interactive session (repeat it for each disk):

Command (m for help): n

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p): p

Partition number (1-4, default 1): 1

First sector (2048-41943039, default 2048):

Using default value 2048

Last sector, +sectors or +size{K,M,G} (2048-41943039, default 41943039):

Using default value 41943039

Partition 1 of type Linux and of size 20 GiB is set

Command (m for help): t

Selected partition 1

Hex code (type L to list all codes): fd

Changed type of partition 'Linux' to 'Linux raid autodetect'

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

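Once the disks are partitioned, add the two 20G partitions to the array, raising the device count from 2 to 4:

mdadm --grow /dev/md0 --raid-devices=4 --add /dev/vdd1 /dev/vde1

Check the array status:

mdadm -D /dev/md0

Wait for the rebuild to complete. Until then, the new members are listed as spare rebuilding: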

/dev/md0:

Version : 1.2

Creation Time : Mon Jun 24 21:39:27 2019

Raid Level : raid1

Array Size : 10475520 (9.99 GiB 10.73 GB)

Used Dev Size : 10475520 (9.99 GiB 10.73 GB)

Raid Devices : 4

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Mon Jun 24 22:10:33 2019

State : clean, degraded, recovering

Active Devices : 2

Working Devices : 4

Failed Devices : 0

Spare Devices : 2

Consistency Policy : resync

Rebuild Status : 53% complete

Name : softraid:0 (local to host softraid)

UUID : 1fb8fc7b:7851fcc4:f4818bdb:0c121770

Events : 32

Number Major Minor RaidDevice State

0 252 17 0 active sync /dev/vdb1

1 252 33 1 active sync /dev/vdc1

3 252 65 2 spare rebuilding /dev/vde1

2 252 49 3 spare rebuilding /dev/vdd1
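Instead of re-running mdadm -D by hand until the rebuild is done, the check can be scripted. mdadm also has a --wait (-W) mode that blocks until resync, recovery or reshape activity on the given array finishes, and that is the simpler choice. The sketch below is a hypothetical alternative that parses the report shown above. It treats the array as busy if the State line mentions recovering, resyncing or reshaping, or if any member is listed as rebuilding. Those keywords are assumptions based on this output, and the function names are made up.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read an `mdadm -D` report on stdin and succeed
# (exit 0) if a rebuild, resync or reshape appears to be in progress.
md_busy() {
  awk '
    /^[ \t]*State :/ && /(recovering|resyncing|reshaping)/ { busy = 1 }
    /rebuilding/ { busy = 1 }
    END { exit (busy ? 0 : 1) }
  '
}

# Hypothetical wrapper: poll the array every 30 seconds until it is idle.
# Needs root, since it runs mdadm -D for real.
wait_until_idle() {
  local md="$1" report
  while :; do
    report="$(mdadm -D "$md")" || return 1   # stop if mdadm itself fails
    printf '%s\n' "$report" | md_busy || return 0
    sleep 30
  done
}
```

Usage: wait_until_idle /dev/md0 && echo "rebuild finished".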

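Be sure to wait until the rebuild has finished before removing the two old 10G disks from the array. First mark the disks to be removed as faulty:

mdadm /dev/md0 -f /dev/vdb1

mdadm /dev/md0 -f /dev/vdc1

Then they can be removed safely:

mdadm /dev/md0 -r /dev/vdb1

mdadm /dev/md0 -r /dev/vdc1

Set the number of devices in the array back to 2:

mdadm --grow /dev/md0 --raid-devices=2

Finally, grow the array to use the full size of its new members:

mdadm --grow /dev/md0 --size=max

If it succeeds, the shell prints a message like this: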

mdadm: component size of /dev/md0 has been set to 20961280K
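The K in that message is KiB (1024 bytes). mdadm -D reports the same number with both a binary (GiB) and a decimal (GB) size in parentheses. The converter below is a hypothetical helper that reproduces both figures, which makes it easy to sanity-check the sizes: 20961280 KiB / 1024² ≈ 19.99 GiB, and 20961280 × 1024 bytes ≈ 21.46 GB.

```bash
#!/usr/bin/env bash
# Hypothetical helper: convert a KiB count (as printed by mdadm) into the
# "GiB GB" pair that `mdadm -D` shows in parentheses.
kib_to_sizes() {
  awk -v kib="$1" 'BEGIN {
    gib = kib / (1024 * 1024)   # binary gigabytes
    gb  = kib * 1024 / 1e9      # decimal gigabytes
    printf "%.2f GiB %.2f GB\n", gib, gb
  }'
}
```

For example, kib_to_sizes 20961280 prints 19.99 GiB 21.46 GB, matching the new Array Size. kib_to_sizes 10475520 prints 9.99 GiB 10.73 GB, the size before the expansion.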

Check the array status again:

mdadm -D /dev/md0

The array capacity has grown from 10G to 20G:

/dev/md0:

Version : 1.2

Creation Time : Mon Jun 24 21:39:27 2019

Raid Level : raid1

Array Size : 20961280 (19.99 GiB 21.46 GB)

Used Dev Size : 20961280 (19.99 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Mon Jun 24 22:17:12 2019

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Consistency Policy : resync

Name : softraid:0 (local to host softraid)

UUID : 1fb8fc7b:7851fcc4:f4818bdb:0c121770

Events : 61

Number Major Minor RaidDevice State

3 252 65 0 active sync /dev/vde1

2 252 49 1 active sync /dev/vdd1

(Important!) Now save the array's configuration to the mdadm config file:

mdadm --detail --scan --verbose >> /etc/mdadm.conf
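The command above appends with >>. If you run it again later, for example after the next change to the array, /etc/mdadm.conf ends up with two ARRAY entries for the same array. One way to guard against that is to key on the array's UUID. This is a sketch under these assumptions:

- The function name save_array_conf is hypothetical.
- It handles one array at a time and expects its ARRAY line on stdin, e.g. from mdadm --detail --brief /dev/md0.
- The conf path argument exists so it can be tried on a scratch file.

Keying on the UUID also means a stale entry is left alone rather than replaced. If an older --verbose entry still lists the old devices= (vdb1/vdc1), edit it by hand.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read the ARRAY line(s) for one array on stdin and
# append them to the config file only if no existing entry already
# carries the same UUID. The argument defaults to /etc/mdadm.conf.
save_array_conf() {
  local conf="${1:-/etc/mdadm.conf}" entry uuid
  entry="$(cat)"
  # Pull the UUID=... token out of the ARRAY line.
  uuid="$(printf '%s\n' "$entry" | grep -o 'UUID=[0-9a-fA-F:]*' | head -n 1)"
  if [ -z "$uuid" ]; then
    echo "no UUID found in input" >&2
    return 2
  fi
  if grep -qF -- "$uuid" "$conf" 2>/dev/null; then
    echo "already recorded: $uuid"
  else
    printf '%s\n' "$entry" >> "$conf"
  fi
}
```

Usage: mdadm --detail --brief /dev/md0 | save_array_conf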

Next, restart the LVM metadata service:

systemctl restart lvm2-lvmetad

Check the PV:

pvdisplay

It still shows 10G:

--- Physical volume ---

PV Name /dev/md0

VG Name imlala-vg

PV Size 9.99 GiB / not usable 2.00 MiB

Allocatable yes (but full)

PE Size 4.00 MiB

Total PE 2557

Free PE 0

Allocated PE 2557

PV UUID EYTFPQ-2Nb3-hCWB-QRVh-ByZd-3K5Q-3TnyZZ

So the PV needs to be resized:

pvresize /dev/md0

Run pvdisplay again and it now shows 20G:

--- Physical volume ---

PV Name /dev/md0

VG Name imlala-vg

PV Size <19.99 GiB / not usable 0

Allocatable yes

PE Size 4.00 MiB

Total PE 5117

Free PE 2560

Allocated PE 2557

PV UUID EYTFPQ-2Nb3-hCWB-QRVh-ByZd-3K5Q-3TnyZZ

Finally, extend the LV. The -r flag tells lvresize to grow the XFS filesystem on it as well:

lvresize -rl +100%FREE /dev/imlala-vg/imlala-lv
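For reference, here is the grow-by-replacement sequence from this section collected into one dry-run script. It is a sketch, not a tested tool. Assumptions:

- The new partitions already exist.
- The names used in this article are passed in as arguments.
- The saving of mdadm.conf and the lvm2-lvmetad restart are left out; run those as shown above.
- mdadm --wait blocks during the rebuild, and a hypothetical guard refuses to remove the old members if a rebuild still seems to be running.

The function names run and expand_raid1 are made up. DRY_RUN defaults to 1, so the script only prints the commands; set DRY_RUN=0 to execute them as root.

```bash
#!/usr/bin/env bash
# Sketch: grow a 2-disk mdadm RAID1 by swapping in two larger partitions,
# then grow the LVM PV and the LV (plus its filesystem) on top.
# DRY_RUN=1 (default) only prints the commands; DRY_RUN=0 runs them.

run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Usage: expand_raid1 MD OLD1 OLD2 NEW1 NEW2 LV
expand_raid1() {
  local md="$1" old1="$2" old2="$3" new1="$4" new2="$5" lv="$6"
  # 1. add the larger partitions as extra mirrors (2 -> 4 devices)
  run mdadm --grow "$md" --raid-devices=4 --add "$new1" "$new2" || return 1
  # 2. block until the rebuild is done; mdadm --wait returns failure when it
  #    had nothing to wait for, so its status alone is not treated as fatal
  run mdadm --wait "$md" || true
  if [ "${DRY_RUN:-1}" != 1 ] && mdadm -D "$md" | grep -Eq 'rebuilding|recovering|resyncing'; then
    echo "rebuild still running on $md, not removing old members" >&2
    return 1
  fi
  # 3. retire the old members: mark faulty, then remove
  run mdadm "$md" --fail "$old1" --remove "$old1" || return 1
  run mdadm "$md" --fail "$old2" --remove "$old2" || return 1
  # 4. back to a 2-device mirror, then use the full size of the new members
  run mdadm --grow "$md" --raid-devices=2 || return 1
  run mdadm --grow "$md" --size=max || return 1
  # 5. grow the PV, then the LV; -r also grows the filesystem on it
  run pvresize "$md" || return 1
  run lvresize -r -l +100%FREE "$lv"
}
```

With this article's names: expand_raid1 /dev/md0 /dev/vdb1 /dev/vdc1 /dev/vdd1 /dev/vde1 /dev/imlala-vg/imlala-lv prints the eight commands in order. Check them before rerunning with DRY_RUN=0.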

When that finishes, check the mount status and the files written earlier: everything is intact.

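RAID1 is fault tolerant. If one disk in the array fails, use the same method as above: mark the failed disk as faulty and remove it, then add a new disk in its place, and the array rebuilds onto it.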
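A sketch of that replacement for a single failed member, under these assumptions:

- The replacement disk is at least as large as the surviving one.
- The disks use an MBR (dos) label, as in this article.
- The new disk is partitioned by copying the surviving disk's partition table with sfdisk -d ... | sfdisk ..., a swap-in for running fdisk by hand.

The function names and the device names in the usage line are hypothetical. As before, DRY_RUN=1 is the default and only prints the commands.

```bash
#!/usr/bin/env bash
# Sketch: replace one failed member of an mdadm RAID1.
# DRY_RUN=1 (default) only prints the commands; DRY_RUN=0 runs them.

run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Usage: replace_member MD FAILED_PART GOOD_DISK NEW_DISK NEW_PART
replace_member() {
  local md="$1" failed="$2" good="$3" new="$4" newpart="$5"
  # 1. mark the broken member faulty and remove it from the array
  run mdadm "$md" --fail "$failed" --remove "$failed" || return 1
  # 2. give the new disk the same MBR layout as the surviving disk
  if [ "${DRY_RUN:-1}" = 1 ]; then
    echo "+ sfdisk -d $good | sfdisk $new"
  else
    sfdisk -d "$good" | sfdisk "$new" || return 1
  fi
  # 3. add the new partition; mdadm then rebuilds the mirror onto it
  run mdadm "$md" --add "$newpart"
}
```

Example, assuming /dev/vdd1 failed and a new disk showed up as /dev/vdf: replace_member /dev/md0 /dev/vdd1 /dev/vde /dev/vdf /dev/vdf1. Then follow the rebuild with mdadm -D /dev/md0 as before.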

荒岛
